Social Collaboration

In this prototype, players can join a space meeting room, where they have randomly colored avatars that mimic their eye movements. Players can create notes using speech-to-text, move the notes across a kanban board using gaze telekinesis, and of course dispose of them with the space disposer.

Players move around using the touchpad or joystick (Index controller) on their right controller. They can toggle eye tracking on/off with F1, gaze visualization on/off with F2, and pointer selection on/off with F3.

| Device | Version | Download |
|---|---|---|
| HTC VIVE Pro Eye | v1.0.3 | |

Running the Prototype

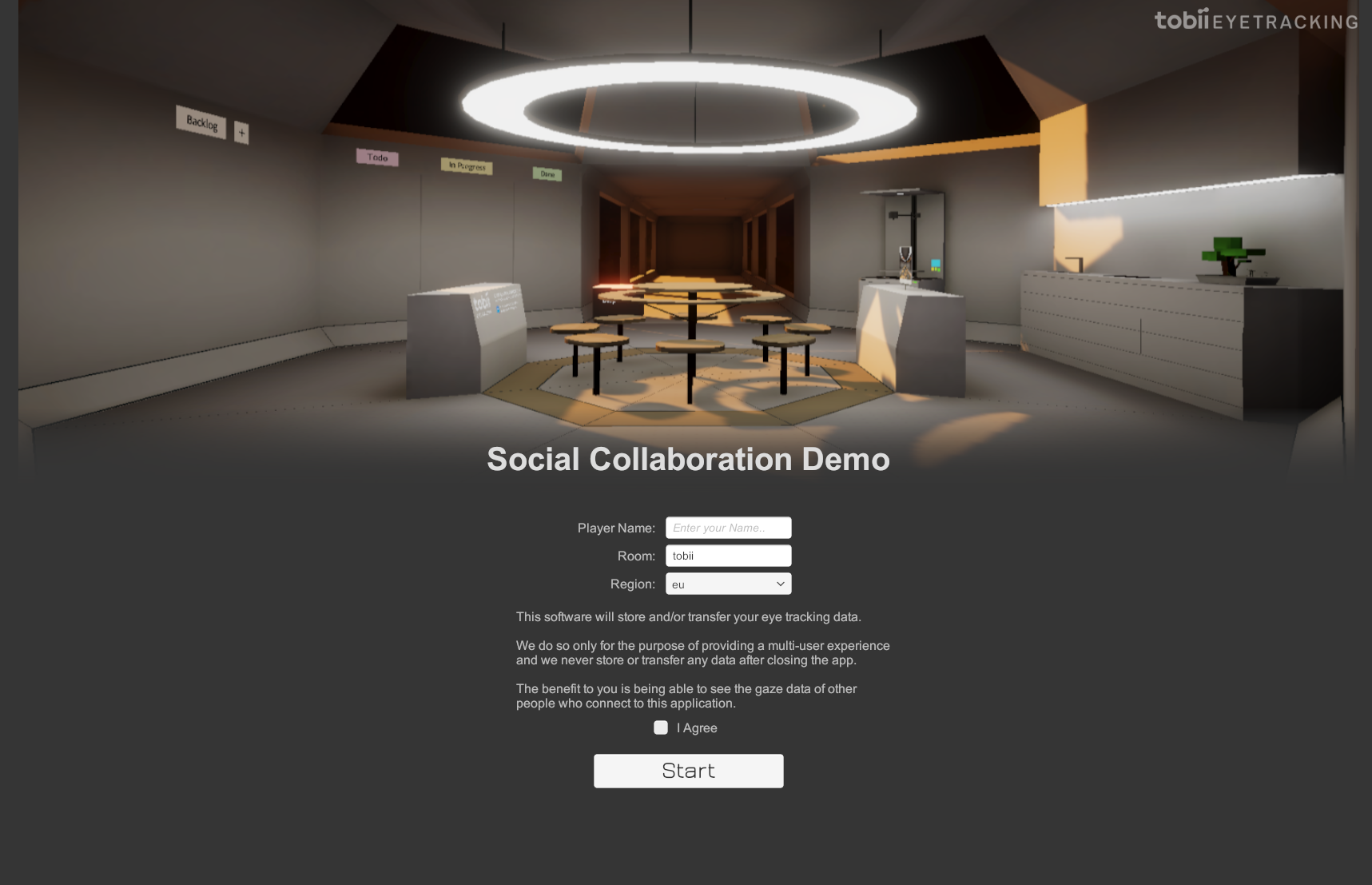

When running the binary, users are met with a launcher screen on their desktop. Here they can enter a player name, the room they wish to join, and the region. Users also have to accept that their eye tracking data is transferred over the network in accordance with Tobii’s Data Transparency Policy.

NOTE: To join the same session as other users, your room name and region need to match theirs.
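The matching rule in the note can be illustrated with a minimal sketch. It assumes that a session is identified by the exact pair of region and room name. The names `JoinRequest`, `session_key` and `group_into_sessions` are hypothetical and are not taken from the prototype or its networking layer.

```python
from collections import defaultdict
from typing import NamedTuple


class JoinRequest(NamedTuple):
    """What a user enters in the launcher (hypothetical structure)."""
    player: str
    room: str
    region: str


def session_key(request: JoinRequest) -> tuple[str, str]:
    # Assumption: both values must match exactly for two users to meet.
    return (request.region, request.room)


def group_into_sessions(requests: list[JoinRequest]) -> dict[tuple[str, str], list[str]]:
    """Group player names by the session they would end up in."""
    sessions: dict[tuple[str, str], list[str]] = defaultdict(list)
    for request in requests:
        sessions[session_key(request)].append(request.player)
    return dict(sessions)


if __name__ == "__main__":
    requests = [
        JoinRequest("Ann", "standup", "eu"),
        JoinRequest("Bob", "standup", "eu"),
        JoinRequest("Cy", "standup", "us"),
    ]
    for key, players in group_into_sessions(requests).items():
        print(key, players)
```

In this sketch, Ann and Bob share a session, while Cy ends up alone because the region differs even though the room name is the same.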

Social Avatars

Seeing others’ eye movements infuses your social avatars with life and naturally adds invaluable social context to your interactions. Read more about best practices when creating social avatars in our social learn section.

With the addition of eye movements you can gain insights into the other user’s mind, just like in real life. You also become aware of the context another user is talking or thinking about by seeing where they look.

Use Case Examples:

- It’s easier to relate to and socialize with other avatars when they behave similarly to a real human being.

- It is immediately apparent when someone is paying attention to you, others, or thinking/remembering, making conversations easier and more natural.

- When a person is speaking or presenting, the audience can understand what they are talking about or referring to solely from their eyes.

Visibility in VR

Eye size, avatar distance and headset resolution all play a part in how easy or difficult it is to see another avatar’s eye movements. Read more about this in our learn section.

In the video, it’s hard to make out the eye movements of the avatar when it is farther away. When we first designed our room, the table was bigger than it is here, and our initial thought was to spawn players around the table with fixed seating. This quickly proved to be a bad design because in some cases it made it hard to see each other’s eyes. We therefore allowed players to move around freely and scaled down the table a bit, so that all other avatars’ eyes are easy to see.

With the resolution in headsets quickly improving, we expect that this will become less of an issue over time.
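The interplay of eye size, distance and resolution can be made concrete with a rough back-of-the-envelope sketch. It computes the visual angle an eye subtends and multiplies it by the headset's pixels per degree. The eye width and pixels-per-degree values in the usage part are illustrative assumptions, not measurements from the prototype or any specific headset.

```python
import math


def angular_size_deg(size_m: float, distance_m: float) -> float:
    """Visual angle (in degrees) of an object of a given size at a given distance."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def pixels_covered(size_m: float, distance_m: float, pixels_per_degree: float) -> float:
    """Approximate number of display pixels the object spans along one axis."""
    return angular_size_deg(size_m, distance_m) * pixels_per_degree


if __name__ == "__main__":
    eye_width_m = 0.03        # assumed avatar eye width
    pixels_per_degree = 15.0  # assumed headset angular resolution
    for distance_m in (0.5, 1.0, 2.0, 4.0):
        pixels = pixels_covered(eye_width_m, distance_m, pixels_per_degree)
        print(f"{distance_m:.1f} m: about {pixels:.1f} px across")
```

The pixel count falls roughly in proportion to distance, which is why bringing avatars closer together or enlarging their eyes helps as much as a higher-resolution display.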

Shared Gaze

To make it even easier to understand context from where someone is looking, we added a gaze ray visualization to the prototype that shares the gaze of avatars and that users can toggle on or off. Read more about shared gaze.

Being able to toggle on other avatars’ gaze rays increases context understanding without the need for extra steps (like adding a laser pointer).

It’s also useful from a presenter’s point of view, to be able to tell where the audience’s attention is.

Telekinesis

In the prototype, users can freely move notes and images around using telekinesis: they look at a note or image, grab it with either hand, and then move the controller. Read more about telekinesis in our learn section.

We have added a fallback method that uses a pointer, both for users without an eye tracking headset and so that the two interactions can be compared, as you can see in the video.

It feels great to move objects around in a zero gravity environment with a high air drag. We call it “thick air”. When released, the objects decelerate somewhat quickly and naturally “stick” to surfaces or wherever you place them within the 3D environment.
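The “thick air” feel can be approximated with a simple model: no gravity and a drag force proportional to velocity, so a released object's speed decays exponentially. The sketch below is an assumption about how such behavior could be modeled, not the prototype's actual physics setup. The function names and the drag value are hypothetical.

```python
import math

Vector = tuple[float, float, float]


def step_velocity(velocity: Vector, drag: float, dt: float) -> Vector:
    """Apply linear drag for one time step (exact solution of dv/dt = -drag * v)."""
    factor = math.exp(-drag * dt)
    return tuple(component * factor for component in velocity)


def simulate_release(position: Vector, velocity: Vector, drag: float,
                     dt: float, steps: int) -> tuple[Vector, Vector]:
    """Advance a released object through zero gravity with high drag."""
    for _ in range(steps):
        velocity = step_velocity(velocity, drag, dt)
        position = tuple(p + v * dt for p, v in zip(position, velocity))
    return position, velocity


if __name__ == "__main__":
    # A note thrown at 2 m/s along x with an assumed drag coefficient of 5 per second.
    position, velocity = simulate_release((0.0, 0.0, 0.0), (2.0, 0.0, 0.0),
                                          drag=5.0, dt=0.01, steps=100)
    print("position after 1 s:", position)
    print("velocity after 1 s:", velocity)
```

With linear drag, the total travel distance is bounded by the initial speed divided by the drag coefficient, so a higher drag value makes objects settle closer to where they were released.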

Gaze selection of objects has many advantages over hand ray selection. It removes one step of interaction. It is faster, easier, and simply more enjoyable, and it immediately feels very natural. It greatly reduces user fatigue since any hand position can be used to manipulate any object, meaning less awkward and more relaxed arm postures. This is especially true of “hard to point at” objects, like small targets or objects located very high or very low. All of these are simple cases for gaze interaction to solve when combined with Tobii G2OM.
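To illustrate the basic idea of gaze selection, the sketch below picks the object whose direction from the eye deviates least from the gaze direction, within a small tolerance cone. This is a deliberately naive stand-in and not how Tobii G2OM works. The function names, the object dictionary and the 5 degree threshold are assumptions made for the example.

```python
import math
from typing import Optional

Vector = tuple[float, float, float]


def angle_between_deg(a: Vector, b: Vector) -> float:
    """Angle in degrees between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / norms))  # clamp against rounding errors
    return math.degrees(math.acos(cosine))


def pick_gazed_object(origin: Vector, direction: Vector,
                      objects: dict[str, Vector],
                      max_angle_deg: float = 5.0) -> Optional[str]:
    """Return the object closest to the gaze ray, or None if none is within the cone."""
    best_name, best_angle = None, max_angle_deg
    for name, position in objects.items():
        to_object = tuple(p - o for p, o in zip(position, origin))
        if not any(to_object):
            continue  # object sits exactly at the gaze origin
        angle = angle_between_deg(direction, to_object)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


if __name__ == "__main__":
    notes = {"note_a": (0.05, 0.0, 2.0), "note_b": (1.0, 0.0, 2.0)}
    print(pick_gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), notes))
```

Because the selection depends only on where the user looks, the hand can stay in any comfortable position while the grab and the controller movement handle the manipulation.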

Creating and Disposing Notes

Users can create their own notes using either text entry in the desktop overlay or speech-to-text in the application.

Of course, having a way to add notes meant we also needed a way to dispose of them, so we added a disposer to the room as well.

We believe that “look and speak” is a powerful user interaction, but it has two glaring UX flaws. Firstly, speech recognition abilities are still less than perfect. Secondly, the social implications of “talking to no one” have prevented voice assistants from gaining traction in public spaces.

Since our text interaction is limited to post-it sized notes, it is simple (and fun, in fact) to toss an incorrect note into the disposer, mitigating our first UX issue. If the user still needs precise text entry, they are free to type on their computer keyboards and spawn whatever notes they desire (although this breaks immersion a bit).

The second UX issue is mitigated by the context of the prototype and the availability of user attention awareness from eye tracking: we’re already in a group setting with a shared environment and a shared sense of attention. You can safely “talk to no one” without confusing or startling anyone, because everyone always knows who or what you’re talking to. We have noticed, though, that it’s sometimes hard to have a discussion when someone else is creating a note by talking, so one option could be to mute the microphone for everyone else when someone is creating a note using speech-to-text.

Further UX improvements come naturally from the improvement of speech recognition algorithms, but more research into “look and speak” interactions, including text entry and gaze-enabled keyboards, is still needed.

Expanding the Prototype

We have a huge backlog of ideas and concepts for this type of social application and we have only scratched the surface of what’s possible. The goal for us was to create a standup and meeting tool for our team since we’re all working remotely due to COVID-19. For internal use, we have also added the functionality of saving/loading rooms via cloud, but this has been removed from the released prototype.

When it comes to how we would want to expand this prototype we have several ideas. Some include polishing it from a prototype to a more finished product, like fixing the bugs, adding new avatars and tweaking the interactions. Other ideas include some more exploration work, like how to improve the creation of new notes or how to add new types of attention and gaze visualizations for a social scenario such as this. Lastly, integration of this prototype into a pre-existing standup or kanban tool is relatively trivial, but would require more UX work.