Input Paradigms

3-to-2 Step Interactions

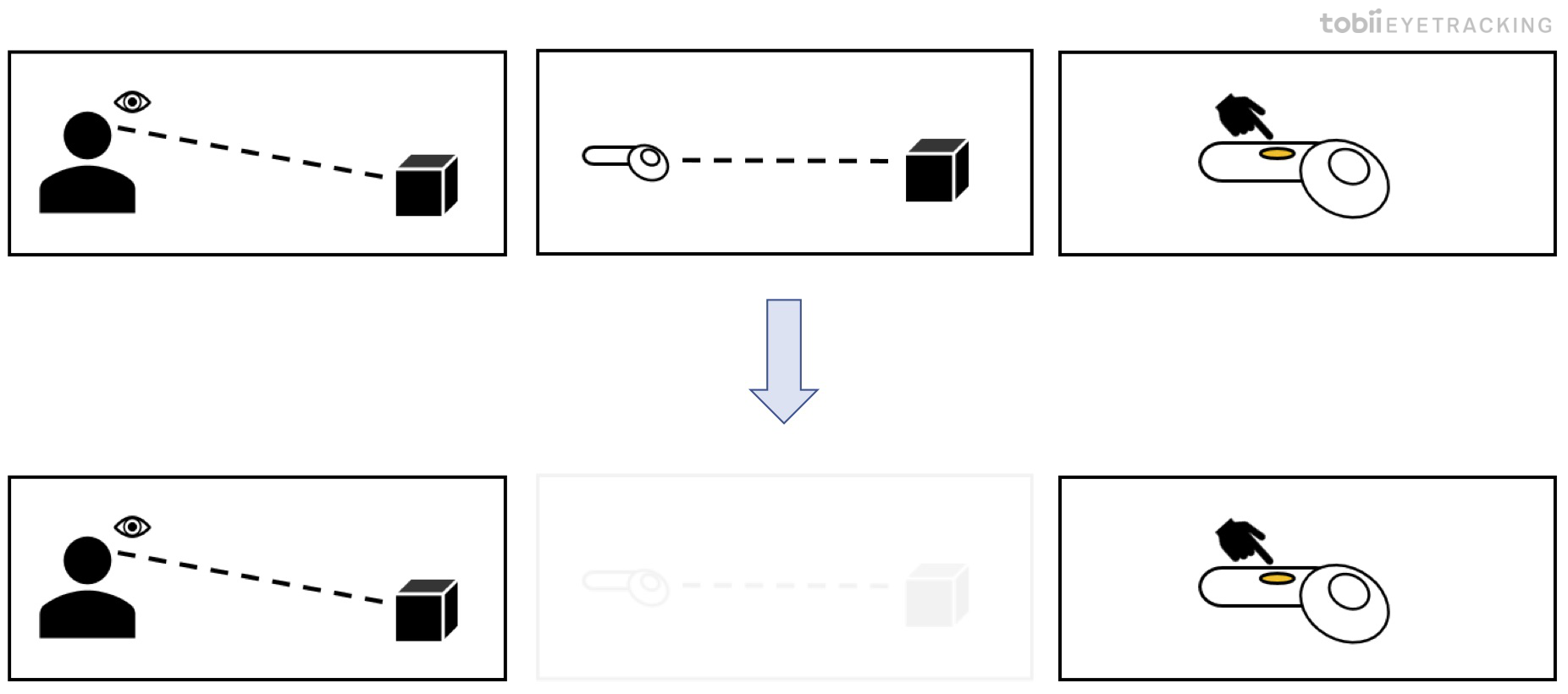

Without eye tracking, activating something in VR is a three-step process:

- Look at an object.

- Point at the object with controller/headset.

- Press a button to activate.

With eye tracking, the second step of pointing with the controller or headset can be removed entirely, reducing the process to two steps.

This also frees up a hand (or your head) that would otherwise have been occupied with pointing.
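The difference between the two flows can be sketched in a few lines. This is a minimal illustration, not any particular SDK's API: the `Frame` type, its fields, and both function names are hypothetical, and the objects hit by the gaze ray and the pointing ray are assumed to be resolved elsewhere.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    """One frame of input. All fields are hypothetical, not a real SDK's types."""
    gazed_object: Optional[str]    # object hit by the eye-gaze ray, if any
    pointed_object: Optional[str]  # object hit by the controller/head ray, if any
    button_pressed: bool           # True on the frame the button goes down


def activate_with_pointing(frame: Frame) -> Optional[str]:
    """Three steps: look, point, press. The pointing ray picks the target."""
    if frame.button_pressed:
        return frame.pointed_object
    return None


def activate_with_gaze(frame: Frame) -> Optional[str]:
    """Two steps: look, press. The gaze ray picks the target directly."""
    if frame.button_pressed:
        return frame.gazed_object
    return None
```

If the user looks at a lamp and presses the button without pointing at anything, `activate_with_gaze` returns the lamp, while `activate_with_pointing` returns nothing because the pointing step was skipped.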

Replacing Head Pointing with Gaze

Moving your head to aim can be difficult and straining.

Head pointing can often be replaced with a Gaze Interaction State. For example, picking up an object by looking at it and pressing a button works well, whereas the same action can feel straining and unnatural when done with head pointing.

Head pointing also requires a reticle to be visualized so that you can relate your head’s rotation to what you’re aiming at in the scene. This is redundant with eye tracking: you simply look at the element you want to select.

Keep in mind that actions with a high cost of error should, if possible, be done with Gaze with Explicit Activation, e.g., looking and pressing a button.
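One way to encode this rule is to tag each action with its cost of error and gate activation on that tag. The sketch below is illustrative only: `ErrorCost` and `should_activate` are hypothetical names, and letting low-cost actions respond to gaze alone is an assumption made for the example, not something the guideline above prescribes.

```python
from enum import Enum


class ErrorCost(Enum):
    LOW = "low"    # e.g. a highlight or hover effect
    HIGH = "high"  # e.g. deleting or purchasing something


def should_activate(cost: ErrorCost, is_gazed_at: bool, button_pressed: bool) -> bool:
    """Decide whether an action fires this frame."""
    if not is_gazed_at:
        return False
    if cost is ErrorCost.HIGH:
        # High cost of error: gaze selects, but only a button press activates.
        return button_pressed
    # Assumption for this sketch: low-cost actions may respond to gaze alone.
    return True
```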

Controller Complements Gaze

Eye tracking can usually replace the pointing of a controller, but not the explicit activation signal of pressing a button. Without the need to point with a controller, the interaction becomes easier and less physically straining.

It’s important to note that interfaces created for a pointing device, like a VR controller, won’t necessarily work when the pointing device is simply replaced with your eyes. The interface needs to be designed with eyes in mind.

Locking onto Targets

When the user adjusts or manipulates an element selected by gaze, and doing so changes the visual appearance of another element, their eyes will be drawn to the visual change. Because gaze then leaves the selected element, the interaction will be cancelled unless a lock-on mechanism is in place.

Locking onto elements can be done in different ways. One example is the Gaze with Explicit Activation state: the user looks at an element and then holds down a button. This allows the user to freely manipulate the first element (e.g., a UI slider) while looking at the visual changes occurring, and then release the button to stop the interaction. However, it requires the user to keep track of whether they are in “lock-on” or “non-locked” mode.
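The hold-to-lock behaviour described above can be sketched as a small state machine. This is a minimal illustration under stated assumptions: the `GazeLockOn` class and its `update` method are hypothetical, elements are identified by plain strings, and the gazed element and button state are assumed to be sampled once per frame by the application.

```python
from typing import Optional


class GazeLockOn:
    """Keeps input routed to the element gazed at when the button went down."""

    def __init__(self) -> None:
        self.locked_target: Optional[str] = None
        self._was_held = False

    def update(self, gazed_target: Optional[str], button_held: bool) -> Optional[str]:
        """Return the element that should receive input this frame, if any."""
        if button_held and not self._was_held:
            # Button just went down: lock onto whatever is gazed at right now.
            self.locked_target = gazed_target
        elif not button_held:
            # Button released (or never held): drop the lock.
            self.locked_target = None
        self._was_held = button_held
        return self.locked_target
```

While the button stays held, `update` keeps returning the locked element even after gaze moves elsewhere, so the user can watch the resulting visual change without cancelling the interaction. Locking only on the button-down edge means that pressing the button while looking at nothing does not later capture an element the gaze happens to pass over.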

We have seen that if the lock-on mechanism is touching the touchpad (and then sliding to change the value), users sometimes rest their thumb on the touchpad unconsciously and get stuck in “lock-on” mode without being aware of it.