Signals & Quality

The eye tracker produces various signals that you can use in your application; the most commonly used raw signals are the gaze origin and the gaze direction. However, because eye tracking signals come from real measurements, they are subject to noise.

Depending on your use case and goals, you might want to filter the signal accordingly. If you want to determine what the user is looking at, we have created Tobii G2OM to make that easier for you. If you want to create social avatars, our social design section suggests how to filter the eye signals.

Accuracy and Precision

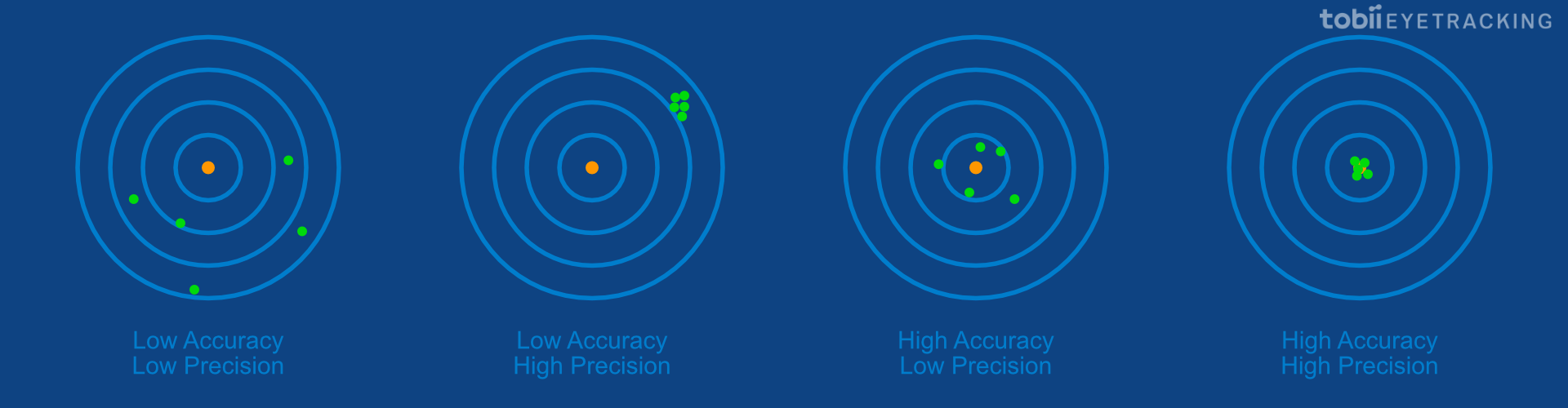

When dealing with low-level eye tracking data, the two most important concepts to keep in mind are signal accuracy and precision:

- Precision is defined as the ability of the eye tracker to reliably reproduce the same gaze point measurement.

- Accuracy is defined as the average difference between the real stimulus position and the measured gaze position.
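Both definitions can be turned into numbers. The sketch below shows one common way to do it, assuming you have gaze directions as 3D vectors and know the true direction to the stimulus: accuracy as the mean angular offset from the target, and precision as the root mean square of sample-to-sample angular distances (RMS-S2S). RMS-S2S is only one of several precision metrics in use (the standard deviation of samples is another), and the function names here are hypothetical, not part of any Tobii API.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two 3D direction vectors (need not be unit length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_theta))


def accuracy_deg(gaze_dirs: Sequence[Vec3], target_dir: Vec3) -> float:
    """Hypothetical helper: mean angular offset between gaze samples and the true target."""
    return sum(angle_deg(g, target_dir) for g in gaze_dirs) / len(gaze_dirs)


def precision_rms_s2s_deg(gaze_dirs: Sequence[Vec3]) -> float:
    """Hypothetical helper: RMS of angles between consecutive samples (needs >= 2 samples)."""
    steps = [angle_deg(a, b) for a, b in zip(gaze_dirs, gaze_dirs[1:])]
    return math.sqrt(sum(s * s for s in steps) / len(steps))
```

A recording that stays perfectly still but 2° away from the target scores about 2° on accuracy and close to 0° on RMS-S2S: an accuracy error with no precision error.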

In the figure above, the user is looking at the orange dot in the center of each target.

A precision error makes your signal look “noisy”, but it can often be filtered away at the cost of added latency. An accuracy error makes your signal look “generally off target”; this type of error is harder to deal with, both in signal processing and in interface design.
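To illustrate the trade-off between noise and latency, here is a minimal sketch of a simple exponential moving average over gaze directions. It is a stand-in chosen for clarity, not the filtering that Tobii G2OM or the social design guidelines use, and `ema_filter` and its `alpha` parameter are hypothetical names. Lower `alpha` suppresses more noise but makes the output trail behind real eye movements.

```python
import math
from typing import Iterable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / n, v[1] / n, v[2] / n)


def ema_filter(gaze_dirs: Iterable[Vec3], alpha: float) -> List[Vec3]:
    """Exponential moving average over gaze directions (hypothetical helper).

    alpha in (0, 1]: 1.0 passes samples through unchanged; smaller values
    suppress more noise but make the output lag behind real eye movements.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    filtered: List[Vec3] = []
    state: Optional[Vec3] = None
    for sample in gaze_dirs:
        g = _normalize(sample)
        if state is None:
            state = g  # Seed the filter with the first sample.
        else:
            # Blend the new sample with the previous estimate, then re-normalize
            # so the result stays a unit direction vector.
            state = _normalize((
                alpha * g[0] + (1.0 - alpha) * state[0],
                alpha * g[1] + (1.0 - alpha) * state[1],
                alpha * g[2] + (1.0 - alpha) * state[2],
            ))
        filtered.append(state)
    return filtered
```

With `alpha=0.3`, alternating ±1° jitter is strongly damped, but after a sudden 10° jump the filtered direction needs several samples to catch up. That delay is the latency cost of removing precision error.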

It is important to note that both accuracy and precision depend on the eye tracker, the user, and the situation. Read more about what affects eye tracking accuracy and precision.

In this video, you can see gaze points recorded in a VR headset as the user looks between the orange dots within different targets. The targets are positioned at the center (0°) and at 12.5° and 25° from the center, with circles marking 1°, 3°, and 5° of visual angle around each orange dot.
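Because these target positions and error circles are expressed as angles, how large they appear in your scene depends on viewing distance. A minimal sketch, assuming a flat surface perpendicular to the straight-ahead direction and angles measured in a single plane; `offset_on_plane` is a hypothetical helper:

```python
import math


def offset_on_plane(distance: float, eccentricity_deg: float, error_deg: float) -> float:
    """Hypothetical helper: distance on a flat surface between a gaze ray at
    `eccentricity_deg` and a ray rotated a further `error_deg`.

    Assumes the surface is perpendicular to the straight-ahead direction at
    `distance` (the result uses the same unit) and both angles lie in one plane.
    """
    e = math.radians(eccentricity_deg)
    err = math.radians(error_deg)
    return distance * (math.tan(e + err) - math.tan(e))


# Print the on-surface size of each error circle for targets 2 m away.
for ecc in (0.0, 12.5, 25.0):
    for err in (1.0, 3.0, 5.0):
        cm = offset_on_plane(2.0, ecc, err) * 100
        print(f"target at {ecc:>4} deg, error {err} deg: {cm:.1f} cm at 2 m")
```

For example, a 1° offset straight ahead on a surface 2 m away is about 3.5 cm, while the same angular offset at 25° eccentricity spans about 4.3 cm of that surface.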

Further Reading

- Eye tracking performance assessment for VR/AR headsets and wearables (Tobii Whitepaper, October 2021)