Tobii G2OM

Trying to determine what the user is looking at is not a trivial problem, and most solutions come with a list of drawbacks. We have experienced these drawbacks first-hand, which is the reason we built Tobii G2OM (Gaze-2-Object-Mapping).

Tobii G2OM is a machine-learned selection algorithm that accurately predicts what the user is looking at. It lets developers focus on creating great eye tracking experiences, while also giving users a more consistent experience.

Tobii G2OM comes bundled with our Tobii XR SDK, which can be found on the downloads page. It builds on years of experience working with eye tracking and has been trained on millions of data points.

We have created a demo to showcase the benefits of Tobii G2OM, which you can try here.

The Challenges of Selecting Objects

In many eye tracking use cases, it is important to know what the user is looking at, which creates the need for an accurate object selection algorithm.

Determining which object the user is looking at is traditionally done by casting a ray from the user’s eyes and checking what the ray intersects. How well this method matches what the user is actually looking at depends on a number of factors, such as the user’s anatomy, the headset model, and how the headset happens to fit at that moment. Ray casting is also not an accurate representation of how our eyes work in real life, where we don’t focus on single, pixel-sized points.
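
To make the failure mode concrete, here is a minimal sketch of the traditional approach, assuming spherical colliders, a single ray from one eye position, and a nearest-hit rule. The names (`SphereCollider`, `raycast_select`, `gaze_direction`) and the object size and distance are hypothetical illustration values, not Tobii XR SDK APIs. It shows how a gaze direction that is off by one degree already misses a small object.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


@dataclass
class SphereCollider:
    # Hypothetical stand-in for an engine collider.
    name: str
    center: Vec3
    radius: float


def hit_distance(origin: Vec3, direction: Vec3, col: SphereCollider) -> Optional[float]:
    """Distance along a unit-length ray to the sphere, or None on a miss."""
    # Solve |origin + t*direction - center|^2 = radius^2 for t >= 0.
    oc = sub(origin, col.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - col.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray passes outside the sphere
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # the origin is inside the sphere
    return t if t >= 0 else None


def raycast_select(origin: Vec3, direction: Vec3,
                   colliders: Sequence[SphereCollider]) -> Optional[str]:
    """Return the name of the nearest collider hit by the gaze ray, if any."""
    direction = normalize(direction)
    best_name, best_t = None, math.inf
    for col in colliders:
        t = hit_distance(origin, direction, col)
        if t is not None and t < best_t:
            best_name, best_t = col.name, t
    return best_name


def gaze_direction(yaw_degrees: float) -> Vec3:
    """Unit vector rotated yaw_degrees to the right of straight ahead (+z)."""
    rad = math.radians(yaw_degrees)
    return (math.sin(rad), 0.0, math.cos(rad))


# A 4 cm wide object 2 m in front of the eye spans only about +/-0.57 degrees.
eye = (0.0, 0.0, 0.0)
small = SphereCollider("small_object", (0.0, 0.0, 2.0), 0.02)
for error in (0.0, 0.5, 1.0):
    print(error, raycast_select(eye, gaze_direction(error), [small]))
```

With these assumed numbers, errors of 0 and 0.5 degrees still hit the object, while a 1 degree error misses it, even though the user is looking straight at it.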

To improve the reliability of ray casting, one approach is to add logic on top of it to make it more sophisticated. Another is to design your scene with the downsides of ray casting in mind. Both methods are difficult and time-consuming, and they require knowledge of and experience with eye tracking. We have experienced this first-hand, and it is why we built Tobii G2OM, a machine-learned selection algorithm trained on millions of data points to determine what the user is looking at.

Example Scenario

The video below shows a user looking at a small object using three different object selection methods. The object turns red when the system considers it selected.

- Raycast, original collider size: This example shows the problem of using ray casting as a selection method. Even though the user is always looking at the object, the ray sometimes misses the object, making the system incorrectly believe that the user is not looking at it.

- Raycast, double collider size: In this example, we’re still casting a ray from the user’s eyes, but against a collider twice the original size. The larger collider solves the misses of a plain ray cast, but it creates other selection issues, for example when objects are close to other objects or partly overlap. You can design around this by placing objects far apart and avoiding overlapping colliders, but that takes a lot of time and becomes increasingly complex in scenarios where the players and/or objects can move around. This approach also introduces false positives, where the user is not looking at an object but the system believes they are.

- Tobii G2OM, original collider size: This shows our machine-learned selection algorithm. Even though the depicted gaze ray cast from the user’s eyes does not hit the object, Tobii G2OM, trained on millions of data points, can tell that the user is in fact looking at the object, without the need to add extra logic such as enlarged colliders.

There are other ways of improving ray casting. For example, several rays can be cast from the user’s eyes, or the size of the colliders can be adjusted dynamically based on their distance from the user. You can also improve selection quality by letting the selection linger for a short period of time when the ray misses an object, but this makes the selection algorithm react more slowly when the user switches between objects. We have tried many ways of solving the issues with selecting objects; all of them have been time-consuming and have required adjusting and configuring individual objects and scenarios, making development more complex.
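
The lingering approach can be sketched as a small state machine, assuming the ray cast reports the name of the hit object (or `None`) once per frame. `LingeringSelector`, `linger_seconds`, and the 0.2 s grace period are hypothetical illustration names and values, not part of the Tobii XR SDK. The sketch shows both sides of the trade-off: short misses are bridged, but the old object stays selected for a while after the user has looked away.

```python
from typing import List, Optional, Sequence, Tuple


class LingeringSelector:
    """Keeps the last hit object selected for a grace period after the ray misses it."""

    def __init__(self, linger_seconds: float = 0.2) -> None:
        self.linger_seconds = linger_seconds
        self.selected: Optional[str] = None
        self._last_hit_time = float("-inf")

    def update(self, hit: Optional[str], now: float) -> Optional[str]:
        if hit is not None:
            # A direct hit always wins and restarts the grace period.
            self.selected = hit
            self._last_hit_time = now
        elif self.selected is not None and now - self._last_hit_time > self.linger_seconds:
            # The ray has missed for longer than the grace period: release.
            self.selected = None
        return self.selected


def run(samples: Sequence[Tuple[float, Optional[str]]],
        linger_seconds: float = 0.2) -> List[Optional[str]]:
    selector = LingeringSelector(linger_seconds)
    return [selector.update(hit, t) for t, hit in samples]


# (timestamp in seconds, object hit by the ray this frame)
samples = [
    (0.00, "cup"),
    (0.05, None),   # the ray briefly slips off the cup
    (0.10, "cup"),
    (0.20, None),   # from here on, the user looks at empty space...
    (0.25, None),   # ...but the cup is still reported as selected
    (0.40, None),   # grace period exceeded: selection released
]
print(run(samples))
```

Lowering `linger_seconds` makes the selector release faster but lets short misses through again, which is why such a value tends to need tuning per object and scenario.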

Tobii G2OM

Tobii G2OM has been created to allow for a robust eye tracking experience, for users and developers alike. It is a machine-learned selection algorithm, trained using millions of data points in order to determine what the user is looking at, in any scene or scenario.

These are the key reasons why you should use Tobii G2OM:

- A better eye tracking experience for users, by more accurately predicting what the user is looking at.

- Focus on creating great eye tracking experiences, without having to invest the time in creating a robust gaze selection algorithm.

-

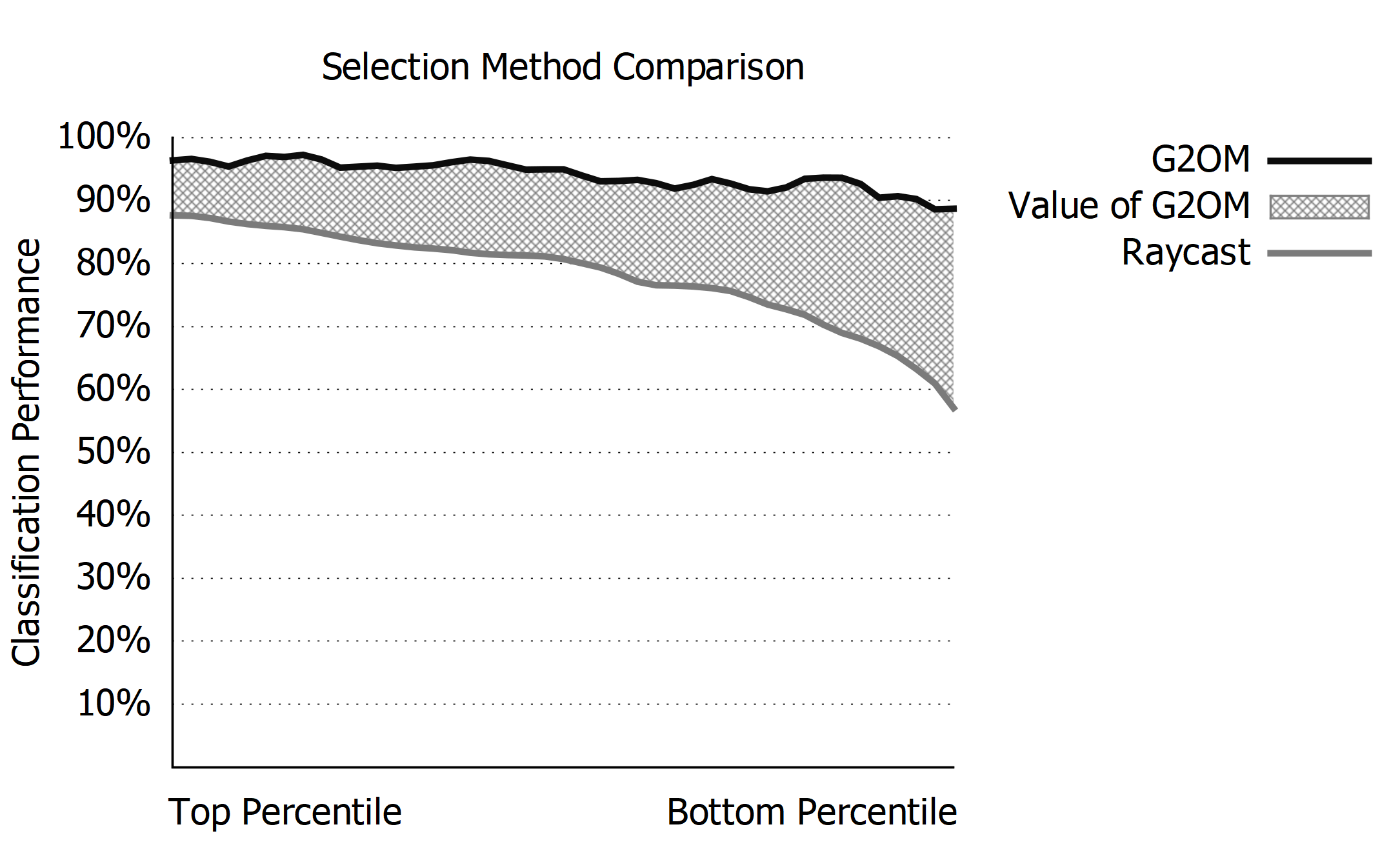

A more consistent experience between different users, allowing you to target a broader userbase with your application. This is visualized in the graph below, highlighting the G2OM value compared to ray casting.