Social

The social example scene contains a ReadyPlayerMe half-body VR avatar that uses eye tracking to move the eyes, eyelids, and eyebrows. You can look at yourself in the mirror to see your eyes move, and you can look up and down to affect the avatar’s eyelid and eyebrow blend shapes.

Check out our design section to learn about how to design for the social use case or eye tracking in general.

Compatibility

This particular Unity Sample is compatible with the following headsets:

| HMD | Compatible | Notes |
|---|---|---|
| HP Reverb G2 Omnicept | Yes | Getting Started |
| Pico Neo 3 Pro Eye | Yes | Getting Started (Make sure Step 6 has been addressed in the sample scene) |
| Pico Neo 2 Eye | Yes | Getting Started (Make sure Step 6 has been addressed in the sample scene) |
| HTC VIVE Pro Eye | Yes | Getting Started |
| Tobii HTC VIVE Devkit | Yes | Getting Started |

Running the sample

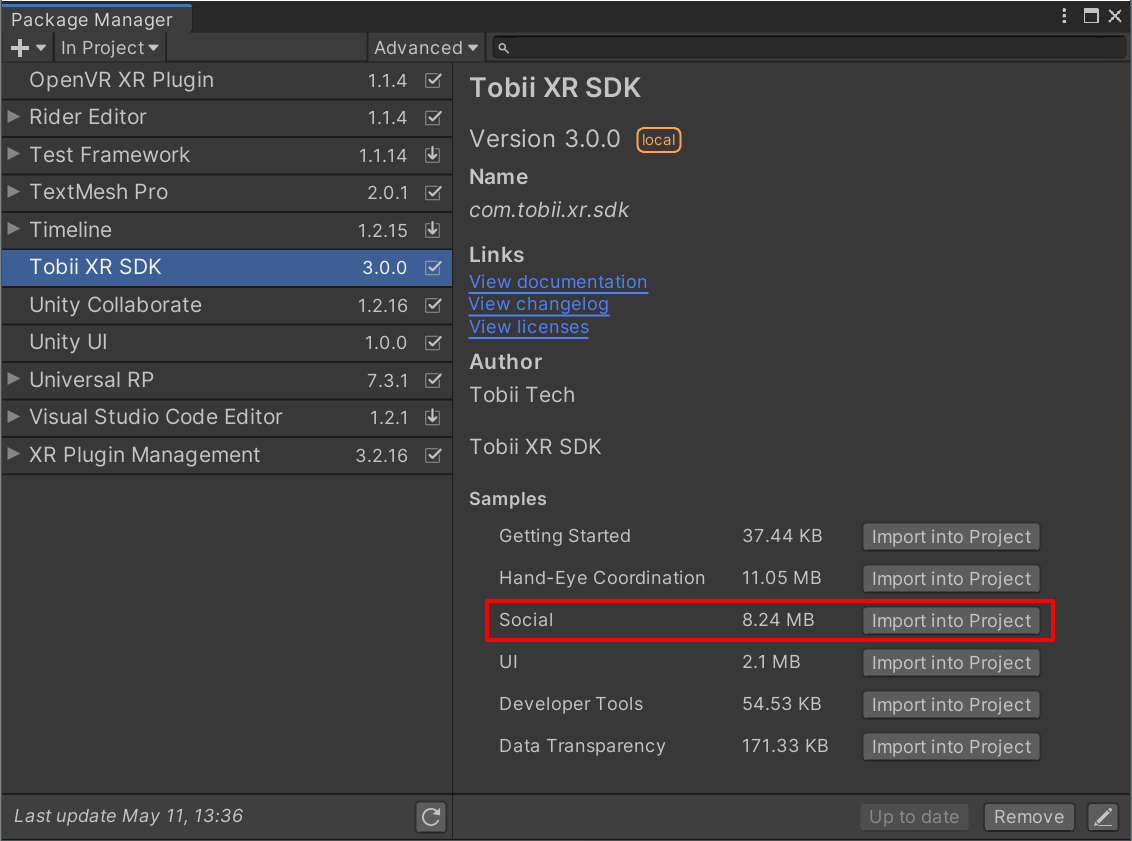

Run the Example_Social scene found under the Samples folder after importing the sample using the Package Manager.

Scene Contents

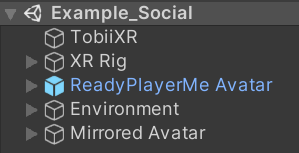

The root objects in the scene are:

- TobiiXR contains TobiiXR_Initializer, which initializes the SDK and Tobii G2OM.

- XR Rig contains the position-tracked XR camera.

- ReadyPlayerMe Avatar contains the player’s avatar.

- Environment contains the wall and lighting.

- Mirrored Avatar contains a copy of the player’s avatar which follows the player’s movements, to mimic the effect of a real mirror.

Avatar

The avatar was created using ReadyPlayerMe, a great service for adding customizable avatars to your app or game.

The relevant elements of this avatar for eye tracking are the eye bones and the SkinnedMeshRenderer on the face.

Eye Bones

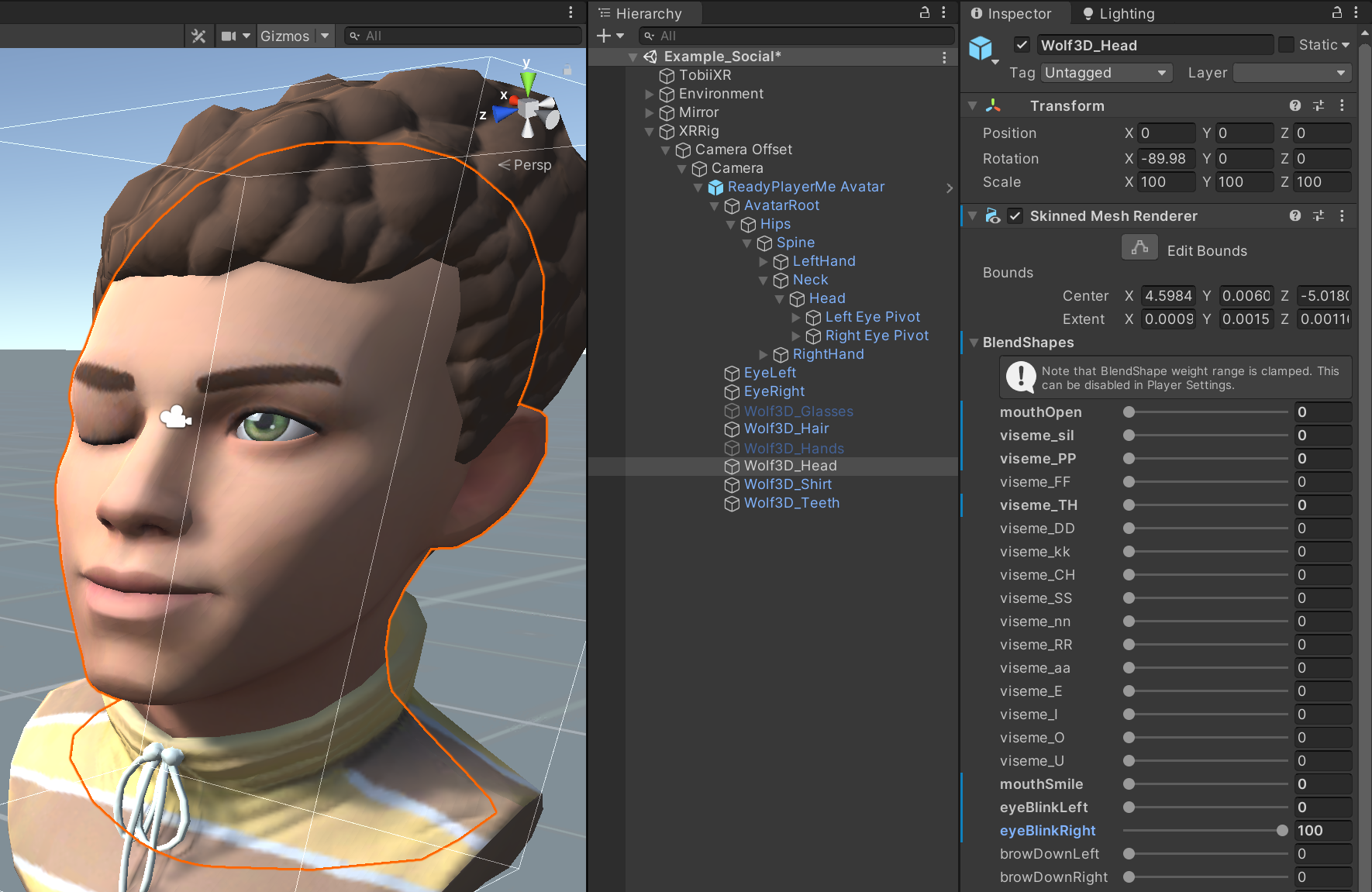

Since this avatar uses a skinned mesh, the eyes are controlled by rotating the eye bones, instead of rotating the eye meshes directly. The bone structure is located in the AvatarRoot object.

The TobiiAvatarEyesAndBlendShapes script assumes that the eye bones point straight forward along the local z direction in the hierarchy. Since the eye bones in the ReadyPlayerMe FBX file do not point straight forward, two extra empty objects (Left Eye Pivot & Right Eye Pivot) were added as parents of the eye bones. These pivot objects, rather than the eye bones themselves, should be rotated using data from the eye tracker.

Face SkinnedMeshRenderer

The avatar’s face mesh (Wolf3D_Head) uses a SkinnedMeshRenderer which has many blend shapes for animating different parts of the face. The eye tracking data is used to animate the following blend shapes:

- eyeBlinkLeft & eyeBlinkRight which lower the eyelids

- eyesLookUp & eyesLookDown which raise and lower the top and bottom eyelids

- browInnerUp, browOuterUpLeft and browOuterUpRight which raise the eyebrows slightly when looking up

In this avatar implementation, there is one known issue: looking down while closing one eye causes the other eyelid to open more. This will likely be fixed in an upcoming release.

Scripts

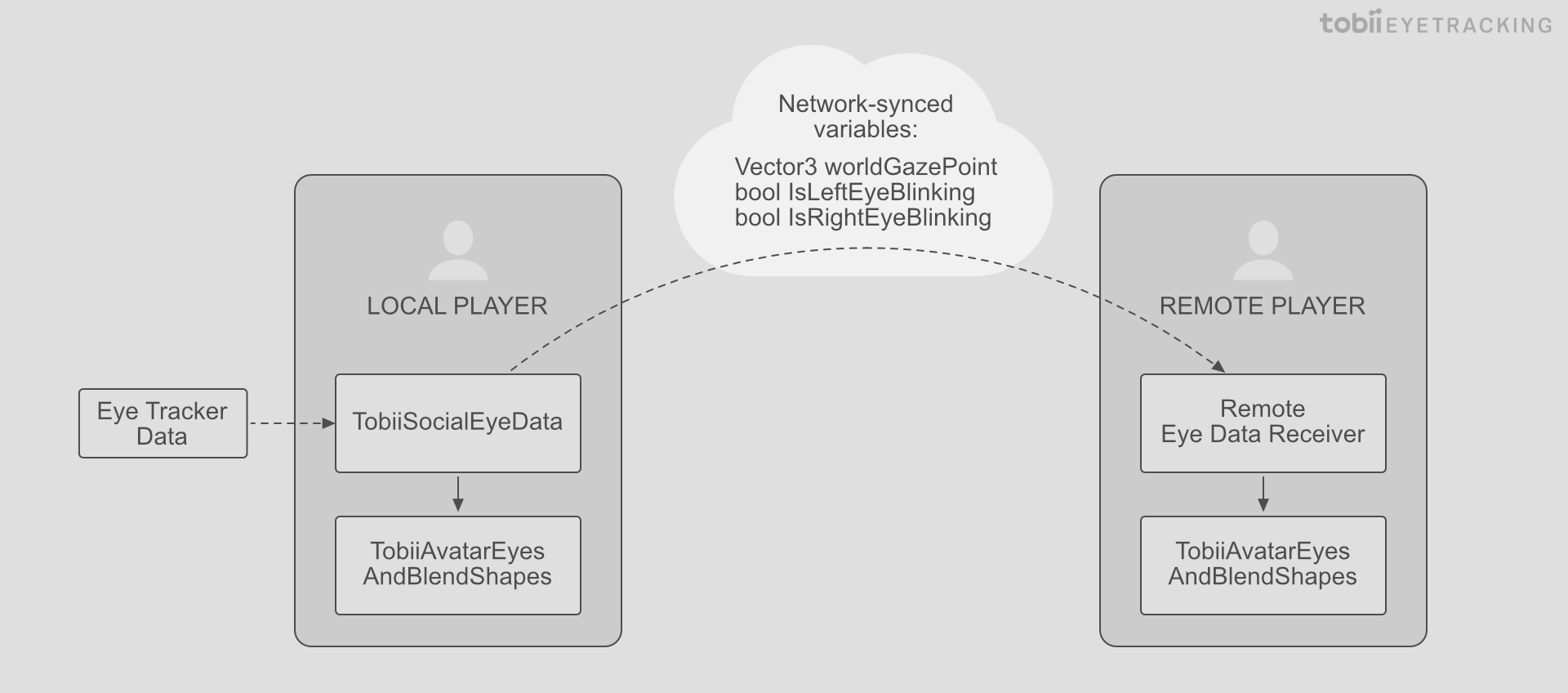

The example scripts are set up in a modular way that makes them easy to use with networking code. The diagram above illustrates how the scripts are meant to work together and how they can send a minimal set of variables over the network. Each script is described below in more detail.
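As a rough illustration of how small the networked payload can be, the Python sketch below packs a world gaze point and two blink flags into 13 bytes. The wire layout and the function names `pack_eye_state` and `unpack_eye_state` are hypothetical and are not part of the sample. A real project would use whatever serialization its networking library provides.

```python
import struct

# Hypothetical wire layout: x, y, z as little-endian float32, then one flags byte.
_FORMAT = "<3fB"


def pack_eye_state(gaze_point, left_blink, right_blink):
    """Serialize a world gaze point and two blink booleans into 13 bytes."""
    flags = (1 if left_blink else 0) | (2 if right_blink else 0)
    return struct.pack(_FORMAT, gaze_point[0], gaze_point[1], gaze_point[2], flags)


def unpack_eye_state(payload):
    """Inverse of pack_eye_state: returns (gaze_point, left_blink, right_blink)."""
    x, y, z, flags = struct.unpack(_FORMAT, payload)
    return (x, y, z), bool(flags & 1), bool(flags & 2)
```

On the receiving side, the unpacked values can be fed to the same eye and blend shape logic that the local player uses.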

TobiiSocialEyeData

This script gets the combined eye direction vector and blink booleans from the eye tracker. The direction vector is converted to a world-space coordinate 2 meters away from the camera. Afterwards, a 1 Euro Filter is used to smooth the world gaze point, in order to make the eyes appear more stable while still maintaining a good saccade speed.
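The projection and smoothing steps can be sketched in Python as follows. This is a minimal illustration rather than the sample's actual implementation: `gaze_point`, `OneEuroFilter`, and `GazePointFilter` are hypothetical names, the filter follows the published 1 Euro Filter formulation, and the default parameters are placeholders that would need tuning.

```python
import math


def gaze_point(origin, direction, distance=2.0):
    """Return the point `distance` meters from `origin` along `direction`."""
    length = math.sqrt(sum(c * c for c in direction))
    return tuple(o + distance * c / length for o, c in zip(origin, direction))


class OneEuroFilter:
    """Scalar 1 Euro Filter: a low-pass filter whose cutoff rises with speed."""

    def __init__(self, min_cutoff=1.0, beta=0.0, d_cutoff=1.0):
        self.min_cutoff = min_cutoff  # Hz; lower values give less jitter at rest
        self.beta = beta              # higher values give less lag in fast moves
        self.d_cutoff = d_cutoff      # Hz; cutoff used for the derivative estimate
        self.x_prev = None
        self.dx_prev = 0.0

    @staticmethod
    def _alpha(cutoff, dt):
        tau = 1.0 / (2.0 * math.pi * cutoff)
        return 1.0 / (1.0 + tau / dt)

    def __call__(self, x, dt):
        if self.x_prev is None:
            # First sample: nothing to smooth against yet.
            self.x_prev = x
            return x
        # Smooth the rate of change, then use it to adapt the cutoff.
        dx = (x - self.x_prev) / dt
        a_d = self._alpha(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = self._alpha(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.x_prev = x_hat
        self.dx_prev = dx_hat
        return x_hat


class GazePointFilter:
    """Applies one independent OneEuroFilter to each axis of a 3D point."""

    def __init__(self, **params):
        self.axes = [OneEuroFilter(**params) for _ in range(3)]

    def __call__(self, point, dt):
        return tuple(f(c, dt) for f, c in zip(self.axes, point))
```

A larger `beta` lets the filtered point keep up with saccades, while a smaller `min_cutoff` steadies the eyes during fixation. This is the trade-off the paragraph above describes.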

If desired, a dynamic convergence distance can be used instead of the fixed 2 meter distance, which would allow the eyes to converge realistically on objects that are close to the eyes. There are two ways this can be done:

- On some Tobii XR devices, a convergence distance API call is available. Convergence distance is difficult to estimate because it must be derived from micro-movements of the pupils, so this value needs to be heavily smoothed.

- A raycast can be made in the gaze direction towards objects in the scene, and the resulting raycast distance can be used. This should also be heavily smoothed, as the raycast can easily flicker between near and far distances when looking at the edges of objects.
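Either source of convergence distance needs heavy smoothing. One simple way to do this, sketched below in Python, is an exponential low-pass filter with clamping. The class name `SmoothedDistance`, the time constant, the clamp range, and the fallback for a missing sample are all assumptions made for illustration, and the sample itself may smooth differently.

```python
import math


class SmoothedDistance:
    """Heavily smooths a noisy convergence or raycast distance (meters)."""

    def __init__(self, default=2.0, time_constant=0.5, min_d=0.1, max_d=10.0):
        self.default = default              # used when no distance is available
        self.time_constant = time_constant  # seconds; larger means smoother
        self.min_d = min_d
        self.max_d = max_d
        self.value = default

    def update(self, raw, dt):
        """Feed one raw sample (or None if the raycast hit nothing)."""
        if raw is None:
            raw = self.default
        raw = min(max(raw, self.min_d), self.max_d)
        # Frame-rate independent blend factor for an exponential filter.
        alpha = 1.0 - math.exp(-dt / self.time_constant)
        self.value += alpha * (raw - self.value)
        return self.value
```

With a time constant of about half a second, a raycast that flickers between a near and a far surface moves the result only slightly per frame, so the eyes do not visibly jump.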

TobiiAvatarEyesAndBlendShapes

This script retrieves eye data from the TobiiSocialEyeData script and uses it to rotate the eye bones and set the face blend shapes.

Rotating the eyes

The eye bones are simply rotated to look at the world gaze point. For the local player, the world gaze point is calculated in TobiiSocialEyeData, whereas for remote players this value can be received from other players over the network.
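The look-at step amounts to computing a yaw and pitch from each eye position to the gaze point. The Python sketch below shows the geometry only. It assumes both points are expressed in a common head-aligned frame with x pointing right, y up, and z forward, and `look_at_angles` is a hypothetical helper rather than a function from the sample.

```python
import math


def look_at_angles(eye_pos, target):
    """Return (yaw, pitch) in degrees that aim the eye's +z axis at target.

    Positive yaw turns the eye to the right, positive pitch turns it upward.
    """
    dx = target[0] - eye_pos[0]
    dy = target[1] - eye_pos[1]
    dz = target[2] - eye_pos[2]
    yaw = math.degrees(math.atan2(dx, dz))
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return yaw, pitch
```

Because the two eyes sit at different positions, aiming both at the same gaze point gives them slightly different yaw angles, and the difference grows as the point gets closer. This is what makes the eyes appear to converge.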

Setting the face blend shapes

The blend shapes are split into 3 sections in the code:

- Blink blend shapes: The blink value is retrieved from the data source, and the LeftEyeLid and RightEyeLid blend shapes are set using an eyelid speed value.

- Looking up blend shapes: When the eyes look upwards past the lookUpBlendShapeStartAngle, several blend shapes are set, which move the eyelids and eyebrows upwards. Since the eyebrows should only move slightly upwards, an EyeBrowBlendShapeFactor determines how much the eyebrows should move.

- Looking down blend shapes: When the eyes look downwards past the lookDownBlendShapeStartAngle, the EyesLookDown blend shape is set, which moves the eyelids down slightly.

In all cases, the blend shapes use an acceleration/deceleration curve to achieve more realistic movement, where muscles 'ease in' and 'ease out' instead of immediately changing speed.
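The Python sketch below shows one way to express the look-up mapping and the easing. It is an assumption-laden illustration and not the sample's code: `full_angle`, `EasedValue`, and the smoothstep curve are hypothetical choices, blend shape weights are taken to range from 0 to 100, and `start_angle` and `eyebrow_factor` stand in for lookUpBlendShapeStartAngle and EyeBrowBlendShapeFactor.

```python
def smoothstep(t):
    """Ease-in/ease-out curve on [0, 1] with zero slope at both ends."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def look_up_weights(pitch_deg, start_angle, full_angle, eyebrow_factor):
    """Map an upward gaze angle to (eyelid_weight, eyebrow_weight) in 0..100.

    Weights stay at 0 until pitch passes start_angle and reach their
    maximum at full_angle (a hypothetical parameter).
    """
    t = (pitch_deg - start_angle) / (full_angle - start_angle)
    t = min(max(t, 0.0), 1.0)
    eyelid = 100.0 * t
    return eyelid, eyelid * eyebrow_factor


class EasedValue:
    """Moves a blend shape weight to a target over `duration` seconds."""

    def __init__(self, value=0.0, duration=0.1):
        self.value = value
        self.duration = duration
        self._start = value
        self._target = value
        self._elapsed = duration

    def set_target(self, target):
        if target != self._target:
            # Restart the transition from wherever the weight is now.
            self._start = self.value
            self._target = target
            self._elapsed = 0.0

    def update(self, dt):
        self._elapsed = min(self._elapsed + dt, self.duration)
        t = smoothstep(self._elapsed / self.duration)
        self.value = self._start + (self._target - self._start) * t
        return self.value
```

For a blink, `set_target(100.0)` would be called when the eye closes and `set_target(0.0)` when it opens, with `update` called once per frame. The weight starts slowly, speeds up, and slows again near the target.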